My First Real AI Nervous Moment

I want to be clear about something before I say anything else.

I’m not an AI pessimist.

I’ve never been an AI pessimist.

I’m usually the one saying, relax—every new technology scares people at first. Electricity, the printing press, the internet, nuclear power.

Same pattern every time. Real risks, huge upside, and in the end, the apocalyptic nightmare scenarios never happen.

So when I say something made me nervous this morning, I mean actually nervous. For the first time.

I talked about this last week, and it mattered enough that I devoted an entire podcast episode to it. I’ll break it down here.

Here’s what happened, to recap.

Altman’s $250M Bet

OpenAI—the company behind ChatGPT, which roughly a billion people now use—just invested $250 million into a brain-computer interface company called Merge Labs.

That sentence alone should make you pause.

Not because brain-computer interfaces are new. Neuralink has been around. Elon Musk talks about it all the time.

What made me stop and think was this:

OpenAI just raised $20 billion.

Companies usually raise money because they need money.

They don’t usually raise money and immediately deploy a quarter-billion dollars into another company—especially one operating at the boundary between biology, AI, and the human mind.

And there’s another detail most articles I’ve read skipped completely: Sam Altman is a co-founder of both OpenAI and Merge Labs.

Look, these are private companies. People can invest however they want. But when you zoom out, the picture gets… interesting.

The Last Interface: You.

Merge Labs isn’t doing what Neuralink does.

Neuralink puts a chip in your brain. It reads electrical signals from neurons. AI learns the patterns. Thought becomes action.

Merge Labs takes a different approach.

They genetically modify neurons to respond to ultrasound.

Then an external ultrasound device—attached outside your skull—can both read and stimulate those neurons.

No chip. No implant.

Biology talking to AI directly.

At a high level, here’s what that means.

Your thoughts can be translated into digital signals. Digital signals can be translated back into thoughts.

Reading and writing.

And once you have that, the use cases explode.

Medical use cases are obvious. Paralyzed people moving limbs.

But that’s just the beginning.

Inside the Read–Write Mind

Imagine controlling devices with your mind. Thermostat. Phone. Computer. Car.

You can play games without a controller. Noland Arbaugh, Neuralink’s first patient, already does this.

You can send emails without typing. You can communicate brain-to-brain. Literal telepathy—powered by infrastructure.

That’s the mind-reading layer. The mind-writing layer is a little crazier, and not enough people are talking about it.

The same system that can observe thoughts can also introduce sensory content, images, emotions, or impulses directly into neural circuits.

The bright side is that it could restore lost sensory input. For example, it could help a blind person see by telling the neurons what’s in the room. Or it could help deaf people hear music for the first time.

But the boundary between help and influence could get blurry.

Can companies implant thoughts in your mind? Not in the sci-fi sense—yet—but shaping which thoughts surface, repeat, or feel “right” already counts as influence.

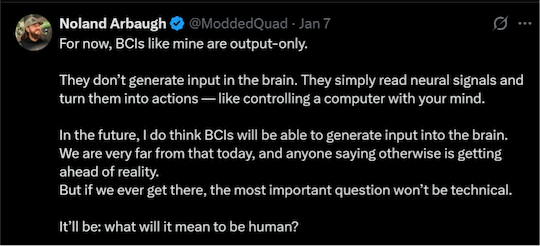

Noland himself has said he believes this is coming.

And then, of course, there’s the AI layer.

Instead of asking ChatGPT a question, you think it. Instead of reading the answer, it arrives as a thought.

Want to respond intelligently in a meeting? The response forms instantly. Want to persuade your boss? The phrasing appears in your mind.

Want to recall history, psychology, strategy, persuasion, negotiation? It’s there, immediately.

It’s Hard Not to Say Yes

And I’ll be honest: if this worked, I’d want it.

Not tomorrow. Maybe not next year. But eventually.

Because someone with this interface is going to think faster, learn faster, respond faster, and operate at a level that makes everyone else feel…

Slow.

That’s the part people don’t talk about enough.

For most people, the incentives to want it will be overwhelming.

Opt-out stops being a real option once opt-in creates a massive advantage.

But here’s another part that made me pause.

Data, Data, Data

Data is the fuel of AI.

Every large model—ChatGPT, Gemini, Grok—has access to roughly the same public data. Books. Tweets. Reddit. News. Videos.

What differentiates them is what data they get next.

And thoughts are the ultimate dataset.

Unfiltered. Pre-language. Pre-censorship. Pre-performance.

One widely repeated, though loosely sourced, estimate is that humans have around 60,000 thoughts per day.

Multiply that by millions, eventually billions, of people.

Now imagine an AI learning from that stream continuously.

Every fear. Every desire. Every impulse. Every doubt. Every unspoken idea.

Even if only a fraction is captured, it’s orders of magnitude beyond anything that’s ever existed.

And once that data is absorbed into a model, you don’t get it back.

Ever.

So yes—there are privacy questions. Big ones.

There’s No Undo Button

Sure, safeguards will be promised. Settings. Permissions. Controls.

But the technology has to read your thoughts to be useful. That line—where utility ends and intrusion begins—will be negotiated in real time, and under economic pressure.

I’m not predicting dystopia. I’m not saying thought police are around the corner.

I’m saying this is the first time AI has crossed from external tool to internal interface.

From something you use… to something that also uses you on the most intimate level possible.

And that deserves more attention than it’s getting.

Because this isn’t science fiction anymore.

This is capital being deployed.

I’m still optimistic. I’m still excited.

But for the first time, I’m also nervous.

And that’s why I’m digging deeper into brain-computer interfaces—how they work, who controls them, and what happens when intelligence stops living outside us and starts living inside us.

Because once that shift happens, there’s no undo button.